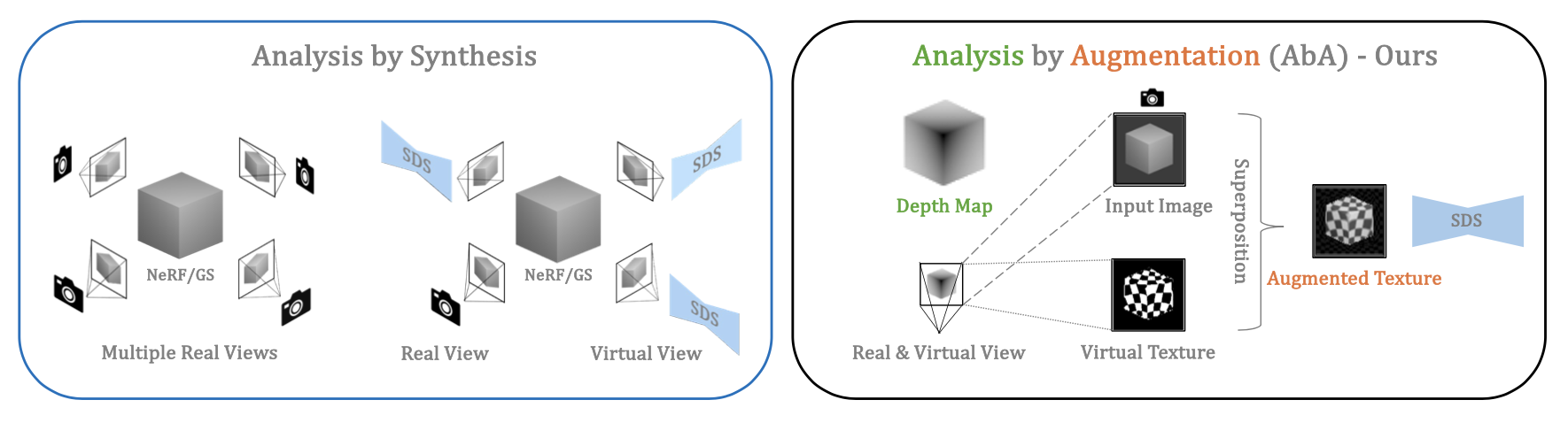

DreamFusion established a new paradigm for unsupervised 3D reconstruction from virtual views by combining advances in generative models and differentiable rendering. However, the underlying multi-view rendering, along with supervision from large-scale generative models, is computationally expensive and under-constrained.

We propose DreamTexture, a novel Shape-from-Virtual-Texture approach that leverages monocular depth cues to reconstruct 3D objects. Our method textures an input image by aligning a virtual texture with the real depth cues in the input, exploiting the inherent understanding of monocular geometry encoded in modern diffusion models. We then reconstruct depth from the deformation of the virtual texture with a new conformal map optimization, which avoids memory-intensive volumetric representations.

Our experiments reveal that generative models possess an understanding of monocular shape cues, which can be extracted by augmenting and aligning texture cues. We call this novel monocular reconstruction paradigm Analysis by Augmentation.

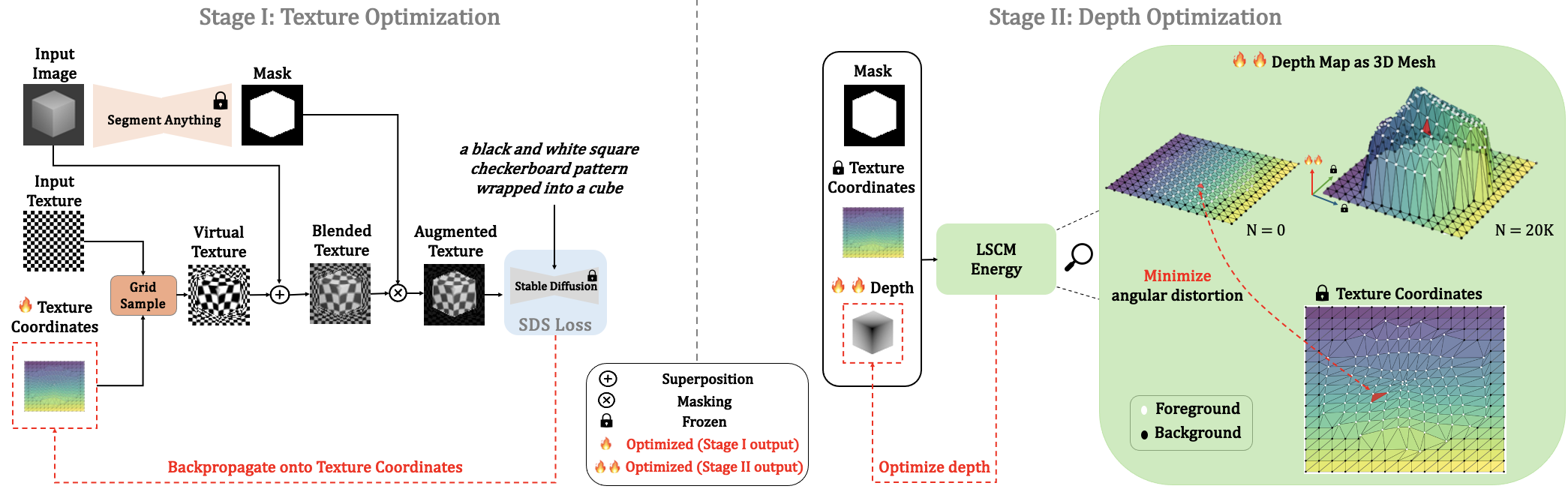

DreamTexture follows a two-stage pipeline.

In Stage I, we use the Score Distillation Sampling (SDS) loss to faithfully augment the input image with a virtual texture, producing texture coordinates that align the texture with the depth cues in the image.
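
For readers unfamiliar with SDS: following DreamFusion, the loss skips the denoiser's Jacobian and uses w(t)(ε̂ − ε) directly as the gradient with respect to the image. Below is a minimal NumPy sketch of a one-sample SDS estimate under loudly stated assumptions: `sds_grad` and `make_oracle_denoiser` are hypothetical names; the "denoiser" is a toy stand-in that predicts noise as if a fixed `target` image were the clean sample (it is not a real text-conditioned diffusion model); w(t) = 1 − ᾱ_t is one common weighting; and pixels are optimized directly. DreamTexture instead optimizes texture coordinates, so in practice this gradient would be backpropagated further through the texturing step, which is omitted here.

```python
import numpy as np


def sds_grad(x, denoiser, alphas_bar, rng):
    """One-sample Monte Carlo estimate of the SDS gradient with respect to image x."""
    t = int(rng.integers(1, len(alphas_bar)))         # random diffusion timestep
    ab = alphas_bar[t]
    eps = rng.standard_normal(x.shape)                # injected Gaussian noise
    z_t = np.sqrt(ab) * x + np.sqrt(1.0 - ab) * eps   # forward-diffused image
    eps_hat = denoiser(z_t, t)                        # model's noise prediction
    w = 1.0 - ab                                      # assumed weighting w(t) = 1 - alpha_bar_t
    # SDS drops the denoiser Jacobian: dL/dx is approximated by w(t) * (eps_hat - eps)
    return w * (eps_hat - eps)


def make_oracle_denoiser(target, alphas_bar):
    """Hypothetical toy 'diffusion model' that treats `target` as the clean image."""
    def denoiser(z_t, t):
        ab = alphas_bar[t]
        return (z_t - np.sqrt(ab) * target) / np.sqrt(1.0 - ab)
    return denoiser


rng = np.random.default_rng(0)
alphas_bar = np.linspace(0.999, 0.01, 1000)   # assumed noise schedule
target = rng.random((4, 4))                   # stand-in for the image the model "prefers"
denoiser = make_oracle_denoiser(target, alphas_bar)

x = np.zeros((4, 4))                          # image optimized directly (simplification)
for _ in range(200):
    x -= 1.0 * sds_grad(x, denoiser, alphas_bar, rng)   # plain gradient descent
```

With this toy denoiser, each step shrinks x − target by a factor of 1 − sqrt(ᾱ_t(1 − ᾱ_t)), so x converges to `target`; a real diffusion model instead pulls the image toward what it considers plausible for the prompt.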

In Stage II, we recover shape from the virtual texture: we treat the depth map as the z-coordinates of a 3D mesh and optimize it with the Least Squares Conformal Maps (LSCM) energy, which minimizes angular distortion of the texture.
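
To make the Stage II objective concrete, here is a minimal NumPy sketch of the classic LSCM (Least Squares Conformal Maps) energy evaluated on a depth-map mesh. It sums, over triangles, the area times the squared violation of the Cauchy-Riemann equations in each triangle's local 2D frame. Assumptions, none of which come from the paper: an orthographic camera (pixel (x, y) lifts to (x, y, depth)), a regular grid triangulation, per-pixel texture coordinates `uv` standing in for the Stage I output, and the hypothetical helper names `grid_triangles`, `depth_to_vertices`, and `lscm_energy`. The sketch only evaluates the energy and uses a brute-force search over one slope parameter; DreamTexture's actual camera model, regularizers, and optimizer may differ.

```python
import numpy as np


def grid_triangles(h, w):
    """Two triangles per pixel quad, counter-clockwise in (x=column, y=row) coords."""
    i, j = np.meshgrid(np.arange(h - 1), np.arange(w - 1), indexing="ij")
    v00 = (i * w + j).ravel()     # vertex at (row i, col j)
    v01, v10 = v00 + 1, v00 + w   # right and lower neighbours
    v11 = v10 + 1                 # diagonal neighbour
    return np.concatenate([np.stack([v00, v01, v11], axis=1),
                           np.stack([v00, v11, v10], axis=1)])


def depth_to_vertices(depth):
    """Lift a depth map to 3D vertices (x, y, z=depth), assuming orthographic projection."""
    h, w = depth.shape
    y, x = np.mgrid[0:h, 0:w].astype(float)
    return np.stack([x.ravel(), y.ravel(), depth.ravel()], axis=1)


def lscm_energy(verts, uv, tris):
    """Sum over triangles of area * ((u_X - v_Y)^2 + (u_Y + v_X)^2) in local 2D frames."""
    p0, p1, p2 = (verts[tris[:, k]] for k in range(3))
    e1, e2 = p1 - p0, p2 - p0
    n = np.cross(e1, e2)
    n_len = np.linalg.norm(n, axis=1)
    area = 0.5 * n_len
    # Orthonormal in-plane frame (ex, ey); ey = n x ex preserves the triangle's orientation
    ex = e1 / np.linalg.norm(e1, axis=1, keepdims=True)
    ey = np.cross(n / n_len[:, None], ex)
    # Local 2D coordinates of the two edges, stacked as rows: shape (T, 2, 2)
    P = np.stack([np.stack([(e1 * ex).sum(1), (e1 * ey).sum(1)], axis=1),
                  np.stack([(e2 * ex).sum(1), (e2 * ey).sum(1)], axis=1)], axis=1)
    # Matching texture-coordinate differences (du, dv) per edge: shape (T, 2, 2)
    dUV = np.stack([uv[tris[:, 1]] - uv[tris[:, 0]],
                    uv[tris[:, 2]] - uv[tris[:, 0]]], axis=1)
    J = np.linalg.solve(P, dUV)   # per-triangle Jacobian [[u_X, v_X], [u_Y, v_Y]]
    u_X, v_X, u_Y, v_Y = J[:, 0, 0], J[:, 0, 1], J[:, 1, 0], J[:, 1, 1]
    # Cauchy-Riemann residuals vanish exactly when the map is conformal
    return float(np.sum(area * ((u_X - v_Y) ** 2 + (u_Y + v_X) ** 2)))


# Toy usage: a texture compressed by c along x should be explained by a slanted plane z = s * x
h, w = 8, 8
tris = grid_triangles(h, w)
ys, xs = np.mgrid[0:h, 0:w].astype(float)
c = 1.25
uv = np.stack([c * xs.ravel(), ys.ravel()], axis=1)   # hypothetical Stage I texture coordinates
slopes = np.linspace(0.0, 3.0, 301)
energies = [lscm_energy(depth_to_vertices(s * xs), uv, tris) for s in slopes]
best = slopes[int(np.argmin(energies))]
```

In the toy usage, the map is conformal exactly when sqrt(1 + s²) = c, so the search lands at s = sqrt(c² − 1) = 0.75 for c = 1.25. The energy depends only on |s|, so in this toy the cue alone does not determine the sign of the slant.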

DreamTexture reconstructs depth with higher fidelity, produces smoother surface normals, and generates more accurate novel views than DreamFusion (DF) and RealFusion (RF), while also running faster.

For related work on similar tasks, check out threestudio, a unified framework for 3D content creation from text prompts, single images, and few-shot images by lifting 2D text-to-image generation models.

@article{bhattarai2025dreamtexture,
  title={DreamTexture: Shape from Virtual Texture with Analysis by Augmentation},
  author={Ananta R. Bhattarai and Xingzhe He and Alla Sheffer and Helge Rhodin},
  year={2025},
  url={https://arxiv.org/abs/2503.16412}
}